“The first casualty of war is the truth.”

This phrase, often attributed to U.S. Senator Hiram Johnson (1917), remains strikingly relevant today: in times of conflict, information becomes a strategic weapon, as powerful as tanks or drones.

Why do nations at war manipulate reality? To rally the undecided, maintain mobilization, and sow doubt among the enemy. But this dangerous game can weaken two essential pillars of any democracy: freedom of the press and freedom of expression.

War is Also Fought in the Mind

Modern wars are no longer fought only on the battlefield: they are also waged on our screens. Controlling information means controlling opinions, weakening the enemy’s morale, justifying one’s actions, or mobilizing the population.

This is known as information warfare, or cognitive warfare: a battlefield where propaganda, disinformation, emotional manipulation, and narrative control intersect.

➡️ During the war in Ukraine, Russia widely disseminated narratives that reversed the roles of aggressor and victim or denied war crimes.

Techniques of Propaganda

🔎 Propaganda does not necessarily tell outright lies. It selects, distorts, simplifies, or repeats certain messages endlessly to influence emotions and behaviors.

In 2001, Belgian historian Anne Morelli identified ten elementary principles of war propaganda. These are recurring patterns, whatever the conflict or the side involved. Among them:

→ We do not want war, but the adversary is solely responsible.

→ The enemy’s leader is a monster, a madman, or a criminal.

→ Our cause is noble; the adversary acts out of self-interest or barbarism.

→ Artists, intellectuals, and journalists support our cause.

→ Anyone who doubts or criticizes is a traitor.

These principles help simplify the complexity of conflicts, mobilize public opinion, and justify the use of force.

Here are 6 commonly used techniques to convey these messages.

1. Atrocity Propaganda: Criminalizing and Dehumanizing the Enemy

One of the oldest tricks: attributing barbaric acts to the enemy to portray them as monsters. This makes it possible to justify any reprisal, without debate or nuance, and to shut down any empathy for the other side.

👉 Ex.: In 1914, British propaganda accused Germans of cutting off Belgian children’s hands. The entirely fabricated story was widely disseminated in American newspapers to push the U.S. to join the war.

👉 Ex.: In 1990, a young Kuwaiti girl testified before a U.S. congressional caucus that Iraqi soldiers had removed babies from incubators. The testimony was a fabrication orchestrated by a PR agency, yet it helped justify military intervention against Saddam Hussein's Iraq.

👉 Ex.: In October 2023, Israel’s Defense Minister referred to Palestinians as “human animals.” This rhetoric prepares public opinion to accept extreme violence.

2. Appeal to Emotion

Fear, anger, compassion… Emotion is used to bypass critical thinking. Shocking images or moving testimonies are replayed constantly to trigger immediate reactions.

⚠️ The stronger the emotion, the less time we take to analyze. It is a powerful lever of manipulation.

3. The Repetition Effect

A lie repeated a thousand times… becomes credible. The more often we are exposed to a piece of information, even a false one, the more likely our brains are to accept it as true. This is called the illusion of truth.

👉 See also our resource: “The Illusion of Truth”

4. Framing and Euphemistic Language

The words we choose shape our perception. Framing does not necessarily misrepresent the facts themselves, only the way they are presented: it steers interpretation without outright lying.

👉 Ex.: Saying we are “striking terrorist targets” versus “bombing civilians” tells very different stories.

Framing also relies on euphemistic language, which makes violence more acceptable, even invisible. Military or technocratic vocabulary creates emotional distance.

👉 Ex.: Speaking of “collateral damage,” “surgical strikes,” or “neutralizing targets” sanitizes the reality of war. We forget that behind these words often lie dead civilians, destroyed homes, and broken lives.

5. Visual Deception

Photos out of context, recycled old videos, manipulated edits, AI-generated images (deepfakes)… In war, images can lie as effectively as words.

👉 Ex.: Some TikTok videos claiming to show recent massacres turn out to be footage from video games, films, or past conflicts.

👉 Ex.: In Ukraine, in March 2022, a deepfake video showed President Volodymyr Zelensky appearing to call on his troops to lay down their arms.

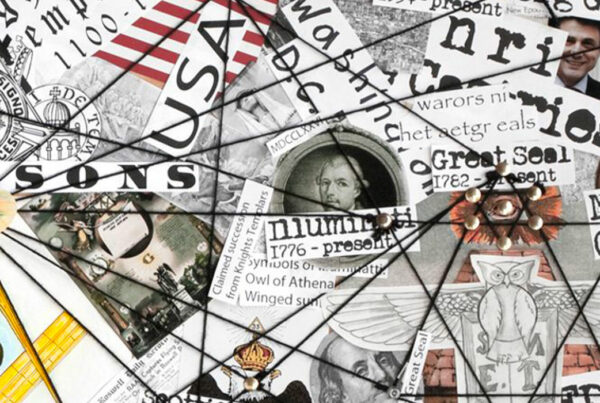

6. Maskirovka: The Art of Deceiving the Enemy

This Russian term, which can be translated as “camouflage,” refers to military disinformation designed to deceive the adversary. The principle is not exclusively Russian: in 1943, the British Operation Mincemeat convinced the Germans that the Allies would land in Greece and Sardinia rather than Sicily.

Since then, this strategy has evolved with information technologies. In the wake of the Gulf War (1991), the concepts of “information dominance” and “network-centric warfare” emerged: the strategic mastery of information to win without necessarily firing a shot.

Today, Russia and China wage prolonged information wars, with disinformation campaigns aimed at weakening enemy societies over the long term.

A “Total War” of Information on the Digital Battlefield

With the internet, wartime disinformation takes on an entirely new dimension: it becomes instantaneous, viral, global.

Facebook, Twitter, TikTok, and Telegram are no longer mere platforms: they are massive influence battlegrounds. In 2022, Meta dismantled a Russian influence operation aimed at discrediting Ukraine, making the country appear corrupt and weak, and justifying the invasion. The goal? To create a false narrative, sow doubt, and legitimize war.

Some authoritarian regimes have taken this logic even further. Russia and China, for example, place agents in the media, rely on data-collection companies, and use psychological profiling techniques to identify the individuals most vulnerable to disinformation.

The Cambridge Analytica Scandal: When Our Data Is Used to Manipulate Us

In 2018, it was revealed that the company Cambridge Analytica had exploited the Facebook data of 87 million people without their knowledge. The goal? To influence major votes such as the Brexit referendum and the election of Donald Trump. How? Using personality quizzes on Facebook, the company collected personal data (including users' friends' data) and built detailed psychological profiles. It then targeted each individual with customized political ads designed to exploit their fears or beliefs.

👉 A scandal that exposed the abuses of massive personal data collection… and its power to manipulate.

Propaganda doesn’t always shout “I’m lying”: it whispers what we want to hear. In wartime, information is a battlefield. To survive it, we must learn to recognize the traps and keep our critical thinking sharp. We must think of disinformation as a virus — and strengthen our collective immune system.

🔗 Sources

- BBC – Disinformation and war

- The Atlantic Council – Digital Forensic Research Lab

- Institut national de l’audiovisuel – “La propagande de guerre”

- EUvsDisinfo – EU site countering pro-Kremlin disinformation

How to Regain Control?

The solution is not to avoid information, but to learn to consume it better:

🔍 1. Read Beyond Headlines

Don’t stop at catchy one-liners: read multiple sources, cross-reference viewpoints, and seek context. Headlines are often designed to attract, not to explain.

🛑 2. Step Back

If a piece of information triggers a strong emotion (anger, fear, outrage), ask yourself: why this reaction? Is the information biased, distorted, or manipulated? A good reflex: seek a reliable source to put the facts back in context.

⏳ 3. Disconnect to Understand Better

Consuming less information, but of better quality, can also give us a clearer view of the world around us. Some researchers even speak of a “media diet”: reducing the noise to better hear the essential signals.


Key Takeaways

✔️ An image can be striking… but completely false.

✔️ There are indicators to spot visuals created by AI (strange details, unrealistic faces, visual inconsistencies).

✔️ Online tools can help, but your critical thinking remains the best detector!

✔️ Never forget to verify the source, seek corroboration elsewhere, and think twice before sharing.